BMe Research Grant

Doctoral School of Electrical Engineering

BME-VIK, Department of Networked Systems and Services

Laboratory of Acoustics

Supervisor: Dr. Fiala Péter

Wave Field Synthesis without aliasing phenomena

Introducing the research area

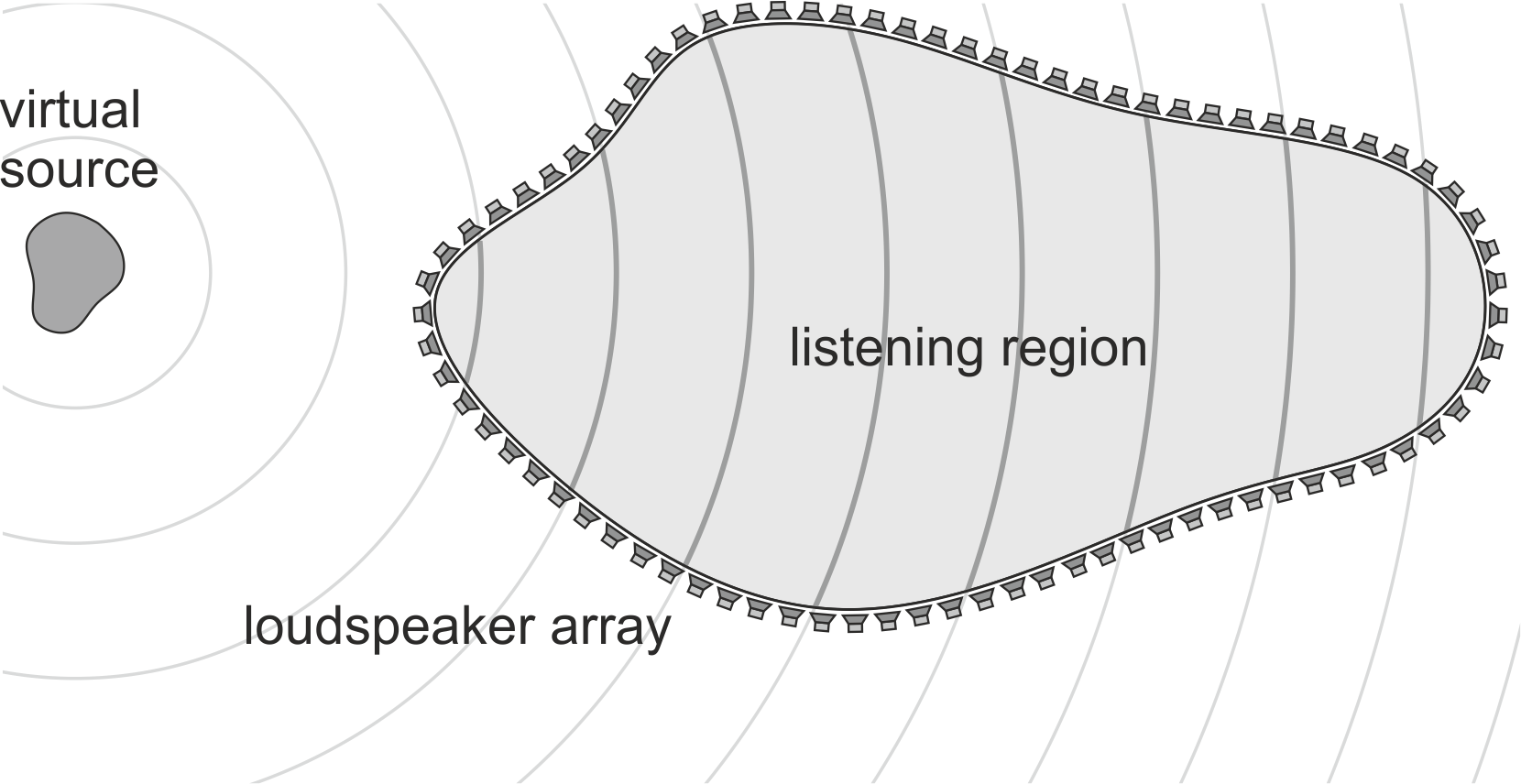

Wave Field Synthesis (WFS) is a state-of-the-art spatial audio technique that reproduces an arbitrary target wave field over an extended listening area by means of a properly driven, densely spaced loudspeaker array enclosing the listening region. The technique creates an "acoustic hologram", thereby overcoming the common limitation of stereophonic systems (Dolby 5.1, Atmos, etc.), which can ensure a perfect surround illusion only in the proximity of a single listening position, termed the sweet spot.

Figure 1.: Basic goal of Wave Field Synthesis

Brief introduction of the research place

My work has been carried out at the BUTE Laboratory of Acoustics and Studio Technologies. Our group performs research and development in a wide variety of fields of acoustics, including numerical acoustics, acoustic signal processing, and building and ground-borne vibrations. In addition, our laboratory is well equipped for both in-situ measurements and measurements in its own anechoic chamber.

History and context of the research

Although the concept of massive multichannel sound reproduction emerged as early as the 1930s, the basics of Wave Field Synthesis were established in the early 1990s at the Delft University of Technology [1-4]. By that time, real-time signal processing and the compact realization of systems employing a large number of loudspeakers had become feasible. Since then, WFS has been the subject of extensive research and development [5-7]. In recent years, WFS-based sound systems have also become commercially available.

Figure 2.: The IOSONO system in the Mann’s Chinese Theater in Los Angeles, one of the commercially available WFS systems

I started my current research on the theoretical foundations of WFS in 2009 as an MSc student. My treatise on the application of the technique in reverberant rooms won 1st prize at the Institutional and 3rd prize at the National Scientific Students' Conference, and my MSc thesis won several thesis grants. My early doctoral research focused mainly on the synthesis of sound fields generated by moving sources [FG1-3]. With my later work I contributed significantly to the development of WFS by deriving a novel unified Wave Field Synthesis theory [FG4,FG5]. A part of this latter research was supported by the New National Excellence Program in 2017. My theoretical framework has served as the basis of several studies carried out by prominent international research groups [8,9], and by now it has been generally accepted by the WFS research community [10]. In addition, my method is currently being implemented in an open-source WFS MATLAB toolbox.

The research goals, open questions

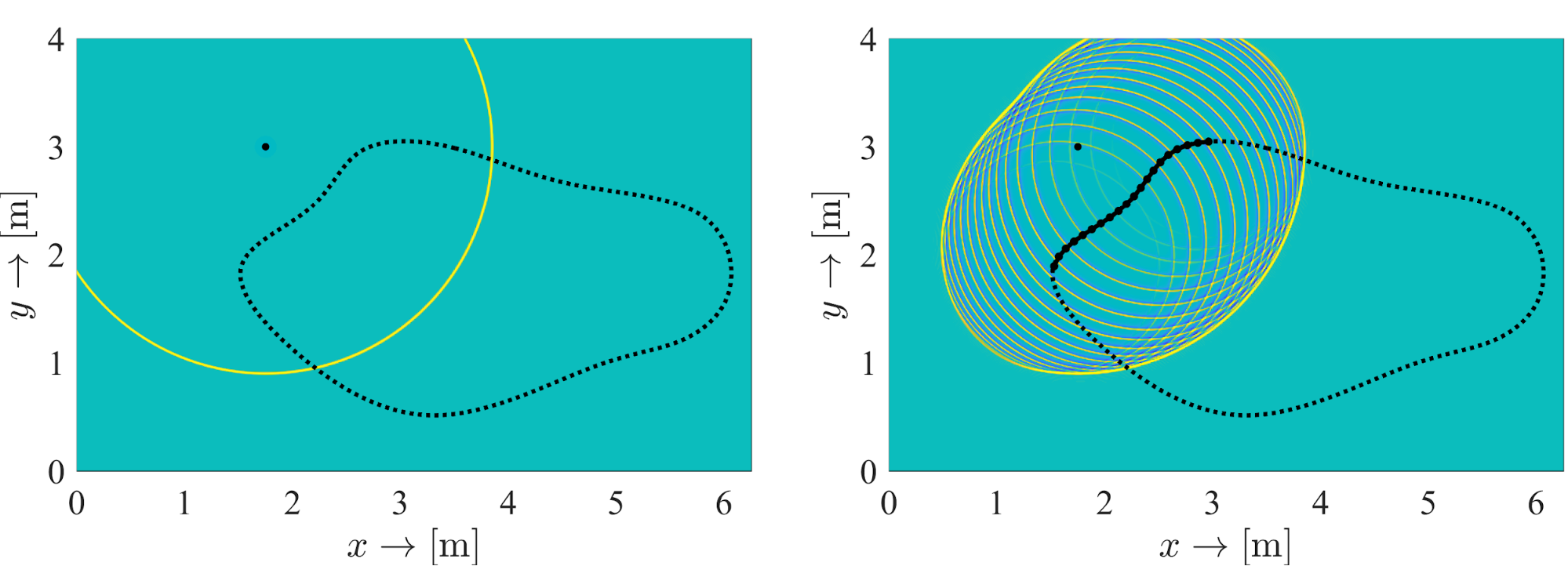

My theoretical framework allows the analytical description and compensation of several secondary artifacts of Wave Field Synthesis. These artifacts emerge from the violation of the ideal physical model in practical applications: instead of the theoretical continuous distribution of acoustic point sources, in practice an array of loudspeakers is used, placed at discrete positions with a non-zero spacing. This leads to clearly audible distortion and colouration of the reproduced wave field. This phenomenon is termed spatial aliasing, and it can be interpreted as the presence of secondary spherical wavefronts that originate from the individual loudspeakers and follow the intended wavefront [11].

Figure 3.: Aliasing artifacts in Wave Field Synthesis when reproducing a point source with impulse excitation. The left figure presents the target wave field, while the right figure shows the reproduced field with strong aliasing waves. The positions of the loudspeakers used for WFS are denoted by a black dashed line.

The goal of my present research is to describe and eliminate these artifacts by applying the theoretical framework I developed. In my investigations I aim to identify the analytical tools capable of describing spatial aliasing, to review and determine the possibilities of avoiding it, and to examine how its elimination affects the intended virtual wave field.

Methods

A frequently used method to describe the effects of the discrete loudspeaker ensemble is the investigation of the loudspeaker driving signals and the reproduced wave field in the spatial-frequency domain (i.e. their spatial Fourier transforms) [6]. In my work, I apply the so-called stationary phase approximation for the asymptotic evaluation of the spectral integrals involved. The method allows the identification of the loudspeaker elements that contribute to the spatial aliasing waves at a given angular (temporal) frequency. By muting these loudspeakers the aliasing artifacts may be avoided, at the cost of altering or distorting the intended wavefront as well.
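For reference, the general form of the stationary phase approximation for a one-dimensional integral with a rapidly oscillating phase is recalled below. The symbols F (slowly varying amplitude), φ (phase function) and x* (stationary point) are generic placeholders chosen here for illustration, not the notation of the cited papers, and a single stationary point with non-vanishing second derivative is assumed.

```latex
\int_{-\infty}^{\infty} F(x)\, \mathrm{e}^{\mathrm{j}\phi(x)}\, \mathrm{d}x
\;\approx\;
\sqrt{\frac{2\pi}{\left|\phi''(x^{*})\right|}}\;
F(x^{*})\,
\mathrm{e}^{\mathrm{j}\phi(x^{*}) + \mathrm{j}\frac{\pi}{4}\,\operatorname{sgn}\left(\phi''(x^{*})\right)},
\qquad \phi'(x^{*}) = 0
```

The integral is thus dominated by the neighbourhood of the point where the phase is stationary, which is what makes it possible to associate a given spectral component with a specific position along the loudspeaker array.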

The developed methodology is verified via numerical simulations in a MATLAB environment. As a further step, the method can also be tested on a real-life WFS system installed in the acoustics laboratory of the University of Rostock. The same system is also suitable for virtual testing, since a measured HRTF database is available, allowing binaural rendering to headphones [12].

It is important to note that an alternative approach exists for the description of spatial aliasing. In parallel with my work, research based on my theoretical WFS framework is being carried out at the University of Rostock. Their approach describes spatial aliasing phenomena purely in the spatial domain, through the asymptotic evaluation of the convolution integrals describing the synthesized field. I am the second author of the corresponding journal paper, which is currently being prepared for submission [13].

Results

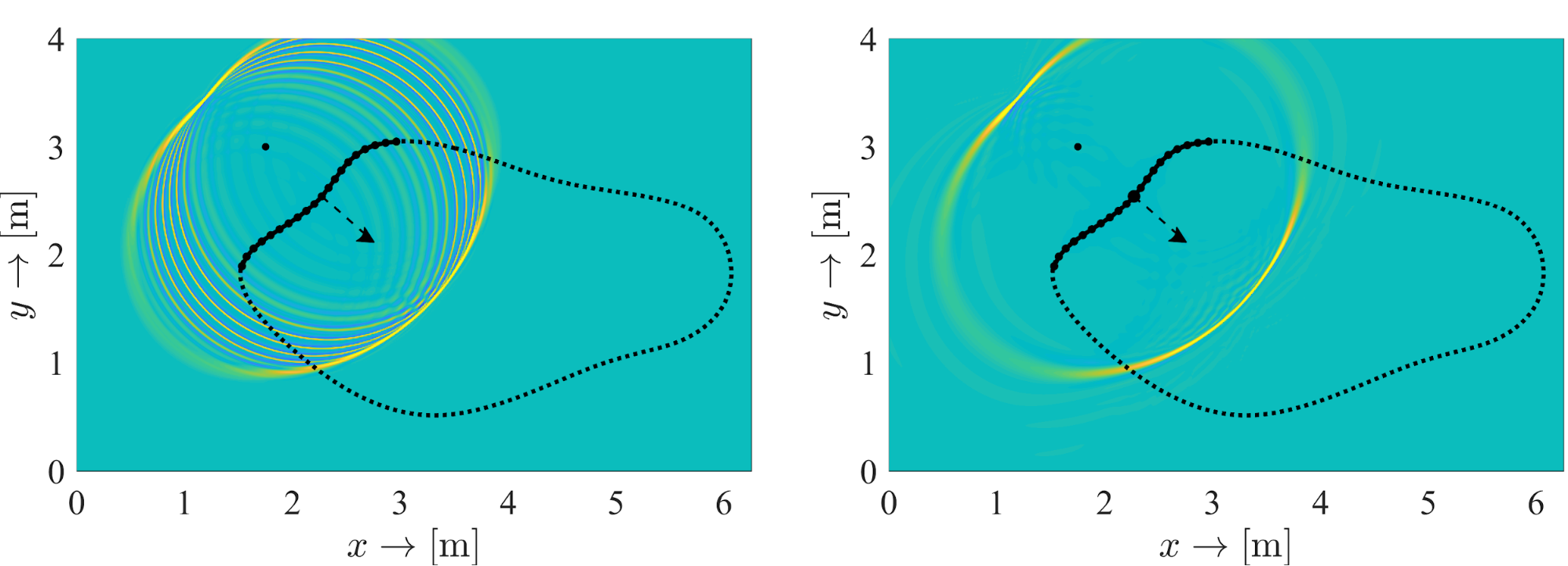

Applying the method described above, i.e. the asymptotic evaluation of spectral integrals, I gave a simple analytical description of the sources of aliasing phenomena at a given frequency. The description makes it possible to define an upper limit frequency for each loudspeaker element, above which the loudspeaker acts as a source of spatial aliasing. By band-limiting each loudspeaker driving signal to this spatial aliasing frequency, aliasing artifacts may be eliminated. Hence, I gave a flexible and efficient solution to the original problem [FG6].

Figure 4.: The result of synthesis with ideal anti-aliasing filtering (left fig.) and with the additional application of an ideally directive loudspeaker array (right fig.).

The left side of Figure 4 depicts the result of the presented approach. As illustrated, applying my method results in the perfect, anti-aliased synthesis of the intended wavefront in one particular direction. This direction is denoted by a dashed black arrow in the figure.
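The idea of a per-loudspeaker cut-off frequency can be illustrated with a minimal Python sketch. It assumes a linear array on the x-axis, a virtual point source behind it, and a simplified criterion: the tangential component of the local wavenumber at each loudspeaker must not exceed the spatial Nyquist wavenumber π/Δx. This criterion, the function name `aliasing_frequencies`, and all numeric values are illustrative assumptions and not necessarily the exact expressions derived in [FG6].

```python
import math

C = 343.0  # assumed speed of sound in m/s


def aliasing_frequencies(source, speaker_xs, spacing, c=C):
    """Per-loudspeaker cut-off frequency (Hz) for a linear array on the x-axis.

    Assumed criterion (illustrative only): (2*pi*f/c) * |k_t| <= pi / spacing,
    where k_t is the tangential component of the unit vector pointing from
    the virtual source to the loudspeaker. This gives
    f <= c / (2 * spacing * |k_t|).
    """
    xs, ys = source
    limits = []
    for x0 in speaker_xs:
        dx, dy = x0 - xs, 0.0 - ys
        dist = math.hypot(dx, dy)
        tangential = abs(dx) / dist
        if tangential == 0.0:
            # Wavefront hits the array perpendicularly: no limit in this model.
            limits.append(math.inf)
        else:
            limits.append(c / (2.0 * spacing * tangential))
    return limits


# Hypothetical setup: 21 loudspeakers, 0.2 m apart, source 2 m behind the array.
spacing = 0.2
speaker_xs = [(i - 10) * spacing for i in range(21)]
limits = aliasing_frequencies((0.0, -2.0), speaker_xs, spacing)

for x0, f in zip(speaker_xs, limits):
    print(f"x0 = {x0:+.1f} m: cut-off = {f:.1f} Hz")
```

In this simplified model the loudspeaker directly in front of the source has no upper limit, while elements further along the array receive progressively lower cut-off frequencies that never drop below the familiar worst-case value c/(2Δx). Each driving signal would then be low-pass filtered at its own cut-off.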

Even if ideal anti-aliasing filtering is applied, any wavefront propagating in a different (lateral) direction is still followed by aliasing components in the synthesized field. These artifacts cannot be avoided by pre-processing the driving signals; their effects can only be eliminated by applying directive loudspeakers. Based on my theoretical framework, I analytically formulated the ideal loudspeaker directivity function that helps eliminate the residual spatial aliasing wavefronts from the synthesized wave field. The result of synthesis using ideally directive loudspeakers is depicted in the right side of Figure 4.

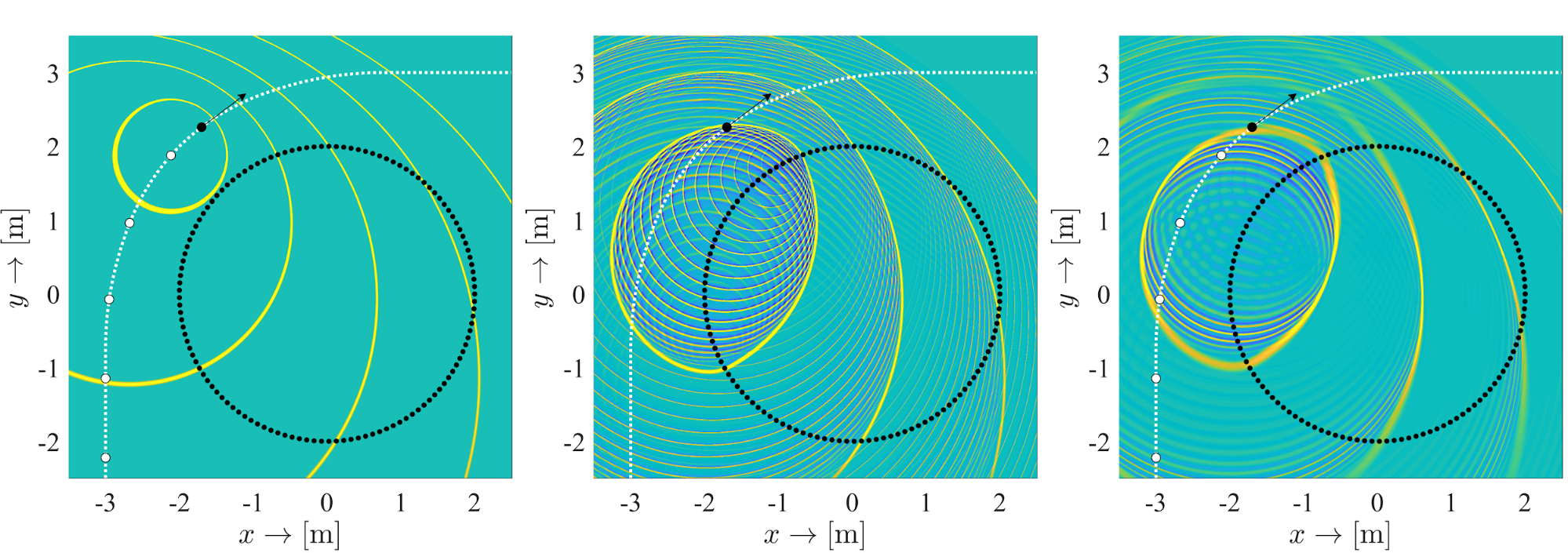

My early doctoral research focused on the synthesis of wave fields generated by moving sound sources. In this dynamic, time-variant case the proper reconstruction of the Doppler effect is of primary importance. I published my results on this topic in several articles [FG1,FG2], and I extended my unified WFS theory to the synthesis of sources moving along an arbitrary trajectory [FG3].

In an earlier conference paper I demonstrated that spatial aliasing artifacts are even more pronounced when synthesizing moving sources, since the aliasing wavefronts undergo a different Doppler shift than the intended, primary wavefront [FG8]. To avoid aliasing in the dynamic case, I extended my anti-aliasing criterion to moving sources, resulting in the definition of a time-variant filter cut-off frequency. The result of the introduced methodology is shown in Figure 5.

Figure 5.: Synthesis of a moving source using a circular loudspeaker array, depicting the target field (left fig.), the synthesized, aliased field (middle fig.) and the synthesis with ideal anti-aliasing filtering (right fig.).
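To illustrate why quantities related to a moving source become time-variant, the following minimal Python sketch evaluates the classical Doppler factor (observed over emitted frequency) seen at a fixed position as a source passes by. It is not the time-variant anti-aliasing criterion itself; the function name `doppler_factor`, the source speed and the geometry are hypothetical values chosen for illustration.

```python
import math


def doppler_factor(source_pos, velocity, observer_pos, c=343.0):
    """Observed/emitted frequency ratio for a moving source and a fixed observer.

    source_pos is the source position at the emission time; c is an assumed
    speed of sound in m/s. Valid for subsonic source motion.
    """
    rx = observer_pos[0] - source_pos[0]
    ry = observer_pos[1] - source_pos[1]
    dist = math.hypot(rx, ry)
    # Velocity component pointing from the source towards the observer.
    radial_speed = (velocity[0] * rx + velocity[1] * ry) / dist
    return 1.0 / (1.0 - radial_speed / c)


# Hypothetical scenario: the source moves along the x-axis direction at 50 m/s
# and passes 2 m behind a loudspeaker located at the origin.
speed = 50.0
loudspeaker = (0.0, 0.0)
emission_times = [-0.1, -0.05, 0.0, 0.05, 0.1]
factors = [
    doppler_factor((speed * t, -2.0), (speed, 0.0), loudspeaker)
    for t in emission_times
]

for t, g in zip(emission_times, factors):
    print(f"t = {t:+.2f} s: Doppler factor = {g:.3f}")
```

The factor is above one while the source approaches and below one once it recedes, and it differs from one observation point to another. Any frequency limit tied to the signal arriving at a given loudspeaker therefore has to follow the motion of the source in time.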

Expected impact and further research

Although I have already presented my early results at an international conference in the spring of 2018 [FG7,FG8], the further elaboration and publication of the topic as a journal paper remains a future task. This elaboration should include an investigation of how the particular direction of anti-aliased synthesis (dashed arrow in Figure 4) may be controlled, as well as the verification of the method by subjective listening tests. Since my approach offers an elegant and efficient solution to a problem that has existed since the establishment of the WFS technique, the related publications are expected to become well-cited references for future research and implementations.

As a further research topic, the method should be investigated for the particular case of a focused virtual source, located inside the listening area.

Publications, references, links

List of corresponding own publications.

[FG1] G. Firtha and P. Fiala. “Sound Field Synthesis of Uniformly Moving Virtual Monopoles”. In: J. Audio Eng. Soc. 63.1/2 (2015), pp. 46–53

[FG2] G. Firtha and P. Fiala. “Wave Field Synthesis of Moving Sources with Retarded Stationary Phase Approximation”. In: J. Audio Eng. Soc. 63.12 (2016), pp. 958–965

[FG3] G. Firtha and P. Fiala. “Wave Field Synthesis of moving sources with arbitrary trajectory and velocity profile”. In: J. Acoust. Soc. Am. 142.2 (2017), pp. 551–560

[FG4] G. Firtha, P. Fiala, F. Schultz, and S. Spors. “Improved Referencing Schemes for 2.5D Wave Field Synthesis Driving Functions”. In: IEEE/ACM Trans. Audio, Speech, Lang. Process. 25.5 (2017), pp. 1117–1127

[FG5] G. Firtha, P. Fiala, F. Schultz, and S. Spors. “On the General Relation of Wave Field Synthesis and Spectral Division Method for Linear Arrays”. In: IEEE/ACM Trans. Audio, Speech, Lang. Process. (2018). Submitted for peer-review

[FG6] G. Firtha and P. Fiala. “Spatial Aliasing and Loudspeaker Directivity in Unified Wave Field Synthesis Theory”. In: 44th Annual Conference on Acoustics (DAGA). Munich, Germany, Mar. 2018

[FG7] G. Firtha and P. Fiala. “Theory and Implementation of 2.5D WFS of moving sources with arbitrary trajectory”. In: 44th Annual Conference on Acoustics (DAGA). Munich, Germany, 2018

[FG8] G. Firtha and P. Fiala. “Investigation of Spatial Aliasing Artifacts of Wave Field Synthesis for the Reproduction of Moving Virtual Point Sources”. In: 42nd German Annual Conference on Acoustics (DAGA). Aachen, Germany, 2016

Table of links.

https://en.wikipedia.org/wiki/Wave_field_synthesis

https://en.wikipedia.org/wiki/Sweet_spot_(acoustics)

https://index.hu/tudomany/2015/03/03/bme_suketszoba_csend_zaj_akusztika/

http://www.iosono-sound.com/company/technology/

http://www.hte.hu/hte-diplomaterv-szakdolgozat-palyazat

https://www.bme.hu/unkp-osztondijasok-2017-18

http://sfstoolbox.org/en/latest/

https://en.wikipedia.org/wiki/Stationary_phase_approximation

https://en.wikipedia.org/wiki/Head-related_transfer_function

List of references.

[1] A.J. Berkhout, D. de Vries and P. Vogel. “Wave Front Synthesis: A New Direction in Electroacoustics”. In: Proc. of the 93rd Audio Eng. Soc. Convention. San Francisco, USA, 1992

[2] A.J. Berkhout, D. de Vries and P. Vogel. “Acoustic Control by Wave Field Synthesis”. In: J. Acoust. Soc. Am. 93.5 (1993), pp. 2764–2778

[3] E.W. Start. “Direct sound enhancement by wave field synthesis”. PhD thesis. Delft University of Technology, 1997

[4] E.N.C. Verheijen. “Sound Reproduction by Wave Field Synthesis”. PhD thesis. Delft University of Technology, 1997

[5] S. Spors, R. Rabenstein and J. Ahrens. “The Theory of Wave Field Synthesis Revisited”. In: Proc. of the 124th Audio Eng. Soc. Convention. Amsterdam, The Netherlands, 2008

[6] J. Ahrens. Analytic Methods of Sound Field Synthesis. 1st ed. Berlin: Springer, 2012

[7] H. Wierstorf. “Perceptual Assessment of Sound Field Synthesis”. PhD thesis. Technische Universität Berlin, 2014

[8] F. Winter, J. Ahrens and S. Spors. “A Geometric Model for Spatial Aliasing in Wave Field Synthesis”. In: 44th German Annual Conference on Acoustics (DAGA). Munich, Germany, 2018

[9] F. Schultz, G. Firtha, P. Fiala and S. Spors. “Wave Field Synthesis Driving Functions for Large-Scale Sound Reinforcement Using Line Source Arrays”. In: Proc. of the 142nd Audio Eng. Soc. Convention. Berlin, Germany, 2017

[10] W. Zhang, P. N. Samarasinghe, H. Chen, and T. D. Abhayapala. “Surround by Sound: A Review of Spatial Audio Recording and Reproduction”. In: Applied Sciences 7.5

[11] S. Spors and J. Ahrens. “Spatial Sampling Artifacts of Wave Field Synthesis for the Reproduction of Virtual Point Sources”. In: Proc. of the 126th Audio Eng. Soc. Convention. Munich, Germany, 2009

[12] V. Erbes, M. Geier, S. Weinzierl and S. Spors. “Database of single-channel and binaural room impulse responses of a 64-channel loudspeaker array”. In: Proc. of the 138th Audio Eng. Soc. Convention. Warsaw, Poland, 2015

[13] F. Winter, G. Firtha, J. Ahrens and S. Spors. “Spatial Aliasing in 2.5D Sound Field Synthesis”. In: IEEE/ACM Trans. Audio, Speech, Lang. Process. (2018/2019). Before submission